Introduction

So let’s say your new web app is getting a lot of incoming requests and that single Docker container isn’t cutting it. You’ll be happy to know there is a reliable way to handle that traffic without digging into your wallet and helping Jeff Bezos or Thomas Krenn buy themselves another yacht. If you are using Docker you can just spawn more containers, but how do you route traffic to those new containers? This is where a load balancer comes into play. We looked at that very concept in our previous article, along with automating the TLS certificates for that single-node setup.

Goals

In this second part of the HAProxy series I’d like to go deeper. Right now our HAProxy relies on someone SSHing into the machine, editing config files and restarting the whole container. That inevitably brings the cluster to a halt every time the configuration changes. With a vanilla HAProxy container, a single typo means no networking at all. Fortunately there is a better way: the dataplane API.

- Dataplane API: this HAProxy component listens for requests on its own port (5555 in our setup) and manages the proxy hosts for you. Every change you submit is checked, written to the configuration file and then picked up through batched, graceful reloads. The result is zero downtime and no spill-over effects. All the basic CRUD tasks, like adding a new host, deleting ACLs or editing routing rules, go through a single REST API.

- Multi node: say you want to stop a node in your three-node hypervisor cluster. The setup we’ll cover today keeps your services running and keeps the HAProxy instances in sync, without editing configuration by hand and risking typos.

If your app isn’t popular, rest assured: you can still use these skills for other jobs. One example is keeping an office full of people who rely heavily on DNS up and running, by putting a load balancer in front of your DNS servers. We’ll go over HTTP load balancing today, but you can transpose what you learn to that use case as well. The DNS setup would be the same, since HAProxy supports TCP forwarding (Layer 4 of the OSI model). A solution like Nginx Proxy Manager’s proxy hosts, by contrast, deals with Layer 7 HTTP forwarding. Keep in mind that this covers DNS over TCP: classic DNS queries mostly travel over UDP, which open-source HAProxy does not proxy.

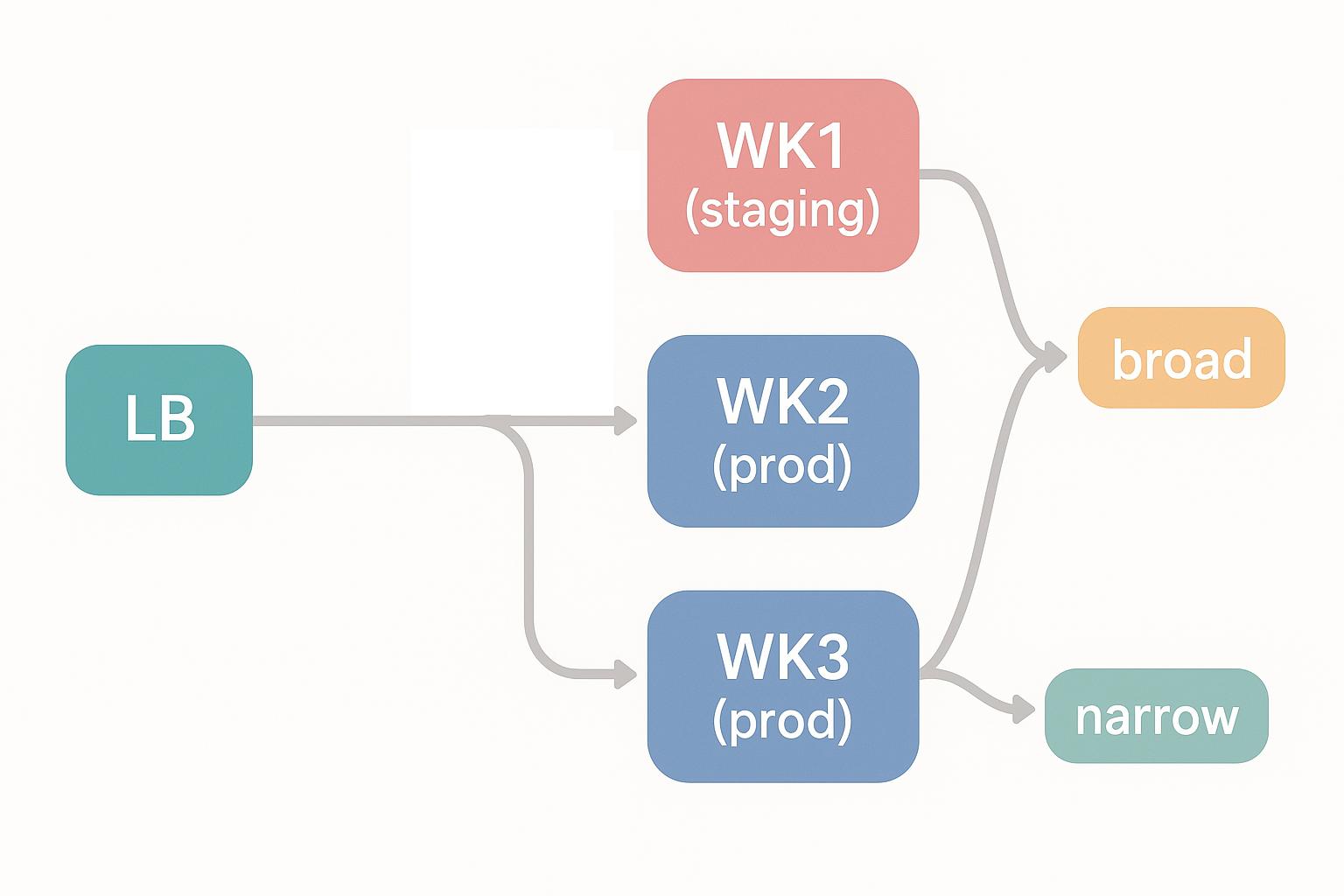

So let’s have a look at the bigger picture of what we intend to build today.

Incoming requests arrive from the left at the load balancer, which forwards them to HAProxy worker nodes #2 and #3. Those two nodes could live on the same machine or on separate machines. Worker #1 is used for staging our changes and making sure the routing is tested before pushing it into production. Broad and Narrow on the right are two fictitious Docker containers.

How do I get this setup to work? Here’s the project structure, so you can get a feel for what the project will look like in the end.

```
dataplane
├── dataplaneapi
│   ├── dataplaneapi
│   ├── dataplaneapi.yml
│   ├── Dockerfile
│   ├── haproxy.cfg
│   ├── haproxy.cfg.lkg
│   └── reload.sh
├── docker-compose.yml
├── haproxy
│   ├── 404.html
│   ├── Dockerfile
│   ├── haproxy.cfg
│   ├── haproxy-lb.cfg
│   └── sock
├── readme.md
└── haproxy.cfg.template
```

The dataplaneapi is the master of your configuration. Once you start using it, it owns the configuration files it manages, and any manual edit you make to them will simply be overwritten.

Setting up the dataplane

So, like we said, the dataplane API is the master and HAProxy is the slave. The Docker socket and the HAProxy Unix socket are both bind-mounted into the dataplane container so that it can reload or restart HAProxy whenever necessary. Both can be found on the host at these locations:

- ./haproxy/sock/haproxy.sock

- /var/run/docker.sock

As always, Docker Compose is responsible for starting and managing the whole setup. Client requests come from the load balancer and flow into haproxy1 and haproxy2 over port 80. TLS is terminated on the load balancer, and all subsequent connections use plain HTTP.

```yaml
services:
  haproxy-lb:
    build: ./haproxy
    image: haproxy:3.2.4
    ports:
      - "443:443"
      - "80:80"
    restart: always
    container_name: haproxy-loadbalancer
    depends_on:
      - dataplaneapi
      - haproxy1
      - haproxy2
    volumes:
      - ./haproxy/haproxy-lb.cfg:/usr/local/etc/haproxy/haproxy.cfg:ro
      - ./haproxy/404.html:/etc/haproxy/errors/custom_404.html:ro
      - /var/lib/docker/volumes/certbot_ssl/_data:/etc/letsencrypt:ro

  haproxy1:
    build: ./haproxy
    image: haproxy:3.2.4
    restart: always
    container_name: haproxy1
    depends_on:
      - dataplaneapi
    volumes:
      - ./dataplaneapi:/usr/local/etc/haproxy/:z
      - ./haproxy/404.html:/etc/haproxy/errors/custom_404.html:ro
      - ./haproxy/sock:/tmp/:z

  haproxy2:
    build: ./haproxy
    image: haproxy:3.2.4
    restart: always
    container_name: haproxy2
    depends_on:
      - dataplaneapi
    volumes:
      - ./dataplaneapi:/usr/local/etc/haproxy/:z
      - ./haproxy/404.html:/etc/haproxy/errors/custom_404.html:ro
      - ./haproxy/sock:/tmp/:z

  dataplaneapi:
    build: ./dataplaneapi
    image: dataplaneapi:3.2.3
    container_name: dataplaneapi
    restart: always
    user: root
    group_add:
      - docker
    ports:
      - "5555:5555"
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - ./dataplaneapi:/dataplane:z
      - ./haproxy/sock:/tmp/:z
```

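I’ve opted to build the dataplane image myself because the container needs the Docker CLI. It uses that CLI to reach the HAProxy containers through the mounted Docker socket (reload.sh runs docker exec against haproxy1).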

- ./dataplaneapi/Dockerfile

```dockerfile
FROM debian:bookworm-slim

# Install dependencies + HAProxy
RUN apt-get update && apt-get install -y \
      curl \
      bash \
      ca-certificates \
      haproxy \
      docker.io \
    && rm -rf /var/lib/apt/lists/*

ARG DPL_VERSION=3.2.3
RUN curl -sSL https://github.com/haproxytech/dataplaneapi/releases/download/v${DPL_VERSION}/dataplaneapi_${DPL_VERSION}_Linux_x86_64.tar.gz \
      | tar -xz -C /usr/local/bin \
    && chmod +x /usr/local/bin/dataplaneapi

RUN mkdir -p /dataplane && chmod 777 /dataplane
COPY dataplaneapi.yml /dataplane/dataplaneapi.yml
COPY haproxy.cfg /dataplane/haproxy.cfg
COPY reload.sh /dataplane/reload.sh
RUN chmod 777 /dataplane/reload.sh
RUN chmod 777 /tmp

EXPOSE 5555
ENTRYPOINT ["/usr/local/bin/dataplaneapi"]
CMD ["-f", "/dataplane/dataplaneapi.yml"]
```

- ./dataplaneapi/dataplaneapi.yml

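This is the configuration file of the dataplane daemon, roughly what a “systemd” unit file is to a service. It sets where the API listens and which userlist holds its credentials. It also names the HAProxy config file the daemon owns and the commands it runs to validate and reload that file.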

```yaml
config_version: 2
name: 3c66442a41ca
dataplaneapi:
  host: 0.0.0.0
  port: 5555
  advertised:
    api_address: ""
    api_port: 0
  scheme:
    - http
  userlist:
    userlist: dataplane_users
    userlist_file: ""
haproxy:
  config_file: /dataplane/haproxy.cfg
  haproxy_bin: /usr/sbin/haproxy
  reload:
    reload_delay: 10
    reload_cmd: sh /dataplane/reload.sh
    restart_cmd: sh /dataplane/reload.sh
    reload_retention: 1
    reload_strategy: custom
    validate_cmd: docker exec haproxy1 /usr/local/sbin/haproxy -c -f /usr/local/etc/haproxy/haproxy.cfg
```
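With this file in place, the API listens on port 5555 and authenticates against the dataplane_users userlist. Right after docker compose up the container may still be starting, so it can help to wait until the API answers before running any of the scripts. The sketch below assumes API_USER and API_PASS hold a user from that userlist and polls the same version endpoint the CRUD scripts use. wait_for_api and the one-second polling interval are illustrative choices, not part of the dataplane:

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll the dataplane API until it answers or we give up.
# Assumes API_USER/API_PASS match a user in the dataplane_users userlist.
API_URL="${API_URL:-http://localhost:5555}"

wait_for_api() {
  local tries="${1:-30}" i
  for ((i = 1; i <= tries; i++)); do
    # -f makes HTTP errors (e.g. 401 with wrong credentials) count as failures
    if curl -fsS -o /dev/null -u "$API_USER:$API_PASS" \
        "$API_URL/v3/services/haproxy/configuration/version" 2>/dev/null; then
      echo "dataplane API is up (attempt $i)"
      return 0
    fi
    sleep 1
  done
  echo "dataplane API did not answer after $tries attempts" >&2
  return 1
}

# Usage: docker compose up -d --build && wait_for_api 60
```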

- ./dataplaneapi/reload.sh

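When a PID file from a previous run exists (the first branch), the script doesn’t restart the container. It starts a new HAProxy process with -sf and the old PID: once the new process is up, it tells the old one to stop accepting connections. The old process keeps serving the connections it already has until they finish, then exits gracefully. New connections go to the freshly started process.

The second branch covers the very first reload, when there is no PID file yet.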

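```sh
#!/bin/sh
# Gracefully reload HAProxy inside the haproxy1 container.
# /tmp is shared with haproxy1 (./haproxy/sock on the host),
# so the PID file written there is readable from this container.
set -e

PID_FILE=/tmp/haproxy.pid

if [ -s "$PID_FILE" ]; then
  OLD_PID=$(cat "$PID_FILE")
  # start a new process; -sf asks the old one to finish its connections and exit
  /usr/bin/docker exec haproxy1 sh -c "/usr/local/sbin/haproxy -f /usr/local/etc/haproxy/haproxy.cfg -p $PID_FILE -sf $OLD_PID"
else
  # first reload: no previous process recorded yet
  /usr/bin/docker exec haproxy1 sh -c "/usr/local/sbin/haproxy -f /usr/local/etc/haproxy/haproxy.cfg -p $PID_FILE"
fi
```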
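Note that reload.sh only touches haproxy1, while haproxy2 reads the very same configuration file through its ./dataplaneapi mount. If both workers should pick up every change, one option is to signal each worker instead of spawning an extra process. This is a swapped-in technique, not what reload.sh does, and it assumes the containers keep the official image’s default entrypoint. That entrypoint runs HAProxy in master-worker mode, and the image’s documentation describes sending SIGHUP to the container for a graceful reload. WORKERS, DOCKER and reload_workers are hypothetical names:

```bash
#!/usr/bin/env bash
# Sketch: validate, then gracefully reload every worker that shares the config.
# Assumption: workers run the official image entrypoint (master-worker mode),
# which reloads on SIGHUP. WORKERS is a hypothetical list of container names.
DOCKER="${DOCKER:-/usr/bin/docker}"
WORKERS="${WORKERS:-haproxy1 haproxy2}"

reload_workers() {
  local worker
  for worker in $WORKERS; do
    # check the config inside the worker first, so a broken file is never loaded
    "$DOCKER" exec "$worker" /usr/local/sbin/haproxy -c -q -f /usr/local/etc/haproxy/haproxy.cfg || return 1
    "$DOCKER" kill -s HUP "$worker" || return 1
    echo "reloaded $worker"
  done
}

# Usage (e.g. as the body of a reload script): reload_workers
```

Validating inside each worker uses that container’s own HAProxy binary, which is the version that will actually load the file.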

- ./haproxy/Dockerfile

Here we build the image for the worker containers. The load balancer is built from the same directory (build: ./haproxy).

```dockerfile
# haproxy/Dockerfile
FROM haproxy:3.2.4-alpine

USER root

# Install socat for HAProxy runtime socket commands, plus curl and dig (bind-tools) for debugging
RUN echo "http://dl-cdn.alpinelinux.org/alpine/v3.22/main" > /etc/apk/repositories \
    && echo "http://dl-cdn.alpinelinux.org/alpine/v3.22/community" >> /etc/apk/repositories \
    && apk update \
    && apk add --no-cache socat curl bind-tools

USER haproxy

# Copy the config into the image
COPY haproxy.cfg /usr/local/etc/haproxy/haproxy.cfg
```

I was experiencing some weird behavior and wanted to use socat to read the health-check state from the HAProxy runtime socket (/tmp/haproxy.sock in the worker container). In the end it turned out the issue was the MTU, because of my virtualized networking. If you’re interested in querying the proxy host states, you can do it like so:

```console
[root@haproxy-wk1 haproxy]# docker exec haproxy1 sh -c "echo 'show servers state' | socat stdio /tmp/haproxy.sock | awk '{print \$4, \$12}'"
[root@haproxy-wk1 haproxy]# watch -n 1 'docker exec haproxy1 sh -c "echo '\''show servers state'\'' | socat stdio /tmp/haproxy.sock | awk '\''{print \$4, \$12}'\''"'
srv_id srv_check_status
app1-staging 3
```

The header reads srv_id srv_check_status only because the header line starts with "#", which shifts awk’s field numbers by one. In the data rows, $4 is srv_name and $12 is srv_check_result, the result of the last health check: 0=UNKNOWN, 1=NEUTRAL, 2=FAILED, 3=PASSED, 4=CONDPASS. So app1-staging 3 means its last check passed.
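To save yourself the mental lookup, here’s a small sketch that labels those results. It assumes the column layout documented in HAProxy’s management guide for show servers state, where field 4 is srv_name and field 12 is srv_check_result. check_result_label and summarize_servers_state are hypothetical helper names:

```bash
#!/usr/bin/env bash
# Sketch: turn "show servers state" output into "server RESULT" lines.
# Field positions per HAProxy's management guide: $4 = srv_name, $12 = srv_check_result.

check_result_label() {
  case "$1" in
    0) echo UNKNOWN ;;   # no check result yet
    1) echo NEUTRAL ;;   # valid check, but no status information
    2) echo FAILED ;;
    3) echo PASSED ;;
    4) echo CONDPASS ;;  # passed, but the server does not want new sessions
    *) echo "UNRECOGNIZED($1)" ;;
  esac
}

summarize_servers_state() {
  # skip the version line (one field) and the "# be_id ..." header line
  awk 'NF >= 12 && $1 !~ /^#/ { print $4, $12 }' |
    while read -r name code; do
      printf '%s %s\n' "$name" "$(check_result_label "$code")"
    done
}

# Usage:
#   docker exec haproxy1 sh -c "echo 'show servers state' | socat stdio /tmp/haproxy.sock" \
#     | summarize_servers_state
```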

- ./haproxy/haproxy-lb.cfg

This configures the load balancer daemon.

```
global
    daemon
    maxconn 256
    log stdout format raw daemon

defaults
    mode http
    timeout client 10s
    timeout connect 5s
    timeout server 10s
    timeout http-request 10s
    log global
    option httplog

frontend http-in
    bind *:80
    mode http
    # Redirect all requests to HTTPS
    http-request redirect scheme https code 301

frontend https-in
    bind *:443 ssl crt /etc/letsencrypt/live/example/haproxy.pem
    mode http
    # make sure the client's IP gets recorded in the header
    # so that we can make certain backends only accessible
    # on the intranet
    option forwardfor header X-Real-IP
    # ACL for internal networks
    acl internal src 10.0.0.0/8 192.168.0.0/16 172.16.0.0/12
    http-request set-header X-Forwarded-Proto https if { ssl_fc }
    # Hostname-based routing
    acl example_subdomain hdr_reg(host) -i ^([a-z0-9-]+)\.example\.com$
    use_backend example-backend if example_subdomain
    # fallback
    default_backend fallback-backend

backend example-backend
    mode http
    option http-server-close
    balance source
    server haproxy1 haproxy1:80 send-proxy
    server haproxy2 haproxy2:80 send-proxy

backend fallback-backend
    mode http
    http-request return status 404 content-type "text/html" lf-file /etc/haproxy/errors/custom_404.html
```
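The example_subdomain ACL is easy to misread. The small bash sketch below mirrors it, to show which Host headers end up on example-backend and which fall through to the 404 backend. It assumes bash’s extended regular expressions behave like HAProxy’s regex engine for this simple pattern. It also lowercases the host first, because hdr_reg -i matches case-insensitively. route_for_host is a hypothetical name:

```bash
#!/usr/bin/env bash
# Sketch: which backend would haproxy-lb.cfg pick for a given Host header?
# The regex is copied from the example_subdomain ACL.
route_for_host() {
  local host="${1,,}"   # hdr_reg(host) -i is case-insensitive, so lowercase first
  local re='^([a-z0-9-]+)\.example\.com$'
  if [[ $host =~ $re ]]; then
    echo example-backend
  else
    echo fallback-backend
  fi
}

for h in app1.example.com APP1.Example.com a.b.example.com example.com app1.example.com:443; do
  printf '%-22s -> %s\n' "$h" "$(route_for_host "$h")"
done
```

Note the last case: the pattern is anchored with $, so a Host header that carries an explicit port does not match. Nested subdomains such as a.b.example.com don’t match either, because the character class excludes dots.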

- ./haproxy.cfg.template

- ./haproxy/haproxy.cfg

- ./dataplaneapi/haproxy.cfg

Before starting the containers, copy the template to both locations so that both services start from the same configuration:

```
global
    daemon
    maxconn 256
    stats socket /tmp/haproxy.sock mode 600 level admin
    log stdout format raw daemon

defaults
    log global
    timeout connect 5s
    timeout client 10s
    timeout server 10s

userlist dataplane_users
    user admin insecure-password <redacted>

frontend http-in
    mode http
    bind *:80 accept-proxy
    http-request set-header X-Forwarded-Proto https
    option forwardfor header X-Forwarded-For if-none
    option httplog
    default_backend fallback-backend

backend fallback-backend
    mode http
    http-request return status 404 content-type "text/html" lf-file /etc/haproxy/errors/custom_404.html
```
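The copy step described before the template can be scripted. The sketch below assumes it runs from the project root. It skips files that already exist: once the stack is running, the dataplane owns dataplaneapi/haproxy.cfg, and overwriting that file would throw away its changes. seed_configs is a hypothetical name:

```bash
#!/usr/bin/env bash
# Sketch: copy haproxy.cfg.template to both locations before the first start.
# Existing files are kept, because the dataplane owns dataplaneapi/haproxy.cfg
# once the stack runs and overwriting it would discard its changes.
seed_configs() {
  local root="${1:-.}" template dest
  template="$root/haproxy.cfg.template"
  if [[ ! -f "$template" ]]; then
    echo "missing $template" >&2
    return 1
  fi
  for dest in "$root/haproxy/haproxy.cfg" "$root/dataplaneapi/haproxy.cfg"; do
    if [[ -e "$dest" ]]; then
      echo "keeping existing $dest"
    else
      cp "$template" "$dest"
      echo "seeded $dest"
    fi
  done
}

# Usage, from the project root:
#   seed_configs . && docker compose up -d --build
```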

CRUD scripts

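The API documentation is pretty exhaustive, but here are some basic bash scripts. They expect jq to be installed and a .env file next to them that defines API_USER and API_PASS (the user from the dataplane_users userlist).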
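All the scripts start the same way: they load the credentials, then read the current configuration version. That version acts as an optimistic lock. Each write carries the version it was based on, and each successful write bumps it by one, which is why the scripts increment CONFIG_VERSION after every call. Here’s a minimal sketch of that first step as a reusable function. It assumes the same endpoint and a .env file defining API_USER and API_PASS; get_config_version is a hypothetical name:

```bash
#!/usr/bin/env bash
# Sketch of the shared first step: load credentials, then read the config version.
# Assumption: .env sits next to the scripts and defines API_USER and API_PASS.
API_URL="${API_URL:-http://localhost:5555}"

if [[ -f ./.env ]]; then
  source ./.env
fi

get_config_version() {
  local v
  # -f turns HTTP errors such as 401 into a non-zero exit status
  v=$(curl -fsS -u "$API_USER:$API_PASS" \
        "$API_URL/v3/services/haproxy/configuration/version") || return 1
  if [[ ! "$v" =~ ^[0-9]+$ ]]; then
    echo "unexpected version reply: $v" >&2
    return 1
  fi
  echo "$v"
}

# Usage:
#   CONFIG_VERSION=$(get_config_version) || exit 1
```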

- Adding a backend with its ACL and routing rule

```bash
#!/bin/bash
# Usage: ./add_backend.sh BACKEND_NAME SERVER_ADDRESS:PORT ACL_CRITERIA FRONTEND_NAME
# Creates "<BACKEND_NAME>-backend" with one server, an ACL "<BACKEND_NAME>-acl"
# (hdr(host) matching ACL_CRITERIA) on FRONTEND_NAME, and a use_backend rule.
set -euo pipefail

if [ -f ./.env ]; then
  source ./.env   # defines API_USER and API_PASS
fi
: "${API_USER:?define API_USER in .env}" "${API_PASS:?define API_PASS in .env}"

API_URL="http://localhost:5555"
API_URL_CONFIG="v3/services/haproxy/configuration/version"
API_URL_BACKEND="v3/services/haproxy/configuration/backends"
API_URL_FRONTEND="v3/services/haproxy/configuration/frontends"

BACKEND_NAME="${1:-}"
SERVER_ADDR="${2:-}"
ACL_CRITERIA="${3:-}"
FRONTEND_NAME="${4:-}"

if [[ -z "$BACKEND_NAME" || -z "$SERVER_ADDR" || -z "$ACL_CRITERIA" || -z "$FRONTEND_NAME" ]]; then
  echo "Usage: $0 BACKEND_NAME SERVER_ADDRESS:PORT ACL_CRITERIA FRONTEND_NAME"
  exit 1
fi

# -f: exit non-zero on HTTP errors, so set -e stops at the first failed call
CURL=(curl -fsS -u "$API_USER:$API_PASS")

# The current HAProxy config version; every successful change bumps it by one
CONFIG_VERSION=$("${CURL[@]}" "$API_URL/$API_URL_CONFIG")
if [[ -z "$CONFIG_VERSION" ]]; then
  echo "Failed to get current config version"
  exit 1
fi
echo "Current config version: $CONFIG_VERSION"

# 1 Create the backend
"${CURL[@]}" -X POST "$API_URL/$API_URL_BACKEND?version=$CONFIG_VERSION" \
  -H "Content-Type: application/json" \
  -d "$(jq -n --arg name "$BACKEND_NAME-backend" '{name: $name, mode: "http"}')"
CONFIG_VERSION=$((CONFIG_VERSION + 1))
echo "✅ Backend added"

sleep 5

# 2 Create the server; host and port are split on the last ":"
"${CURL[@]}" -X POST "$API_URL/$API_URL_BACKEND/$BACKEND_NAME-backend/servers?version=$CONFIG_VERSION" \
  -H "Content-Type: application/json" \
  -d "$(jq -n --arg name "$BACKEND_NAME-backend" --arg address "${SERVER_ADDR%:*}" \
        --argjson port "${SERVER_ADDR##*:}" '{name: $name, address: $address, port: $port}')"
CONFIG_VERSION=$((CONFIG_VERSION + 1))
echo "✅ Server added"

# 3 Add the ACL to the frontend, at the next available index
ACL_INDEX=$("${CURL[@]}" "$API_URL/$API_URL_FRONTEND/$FRONTEND_NAME/acls" | jq 'length')
echo "Next available ACL index for frontend $FRONTEND_NAME: $ACL_INDEX"

"${CURL[@]}" -X POST \
  "$API_URL/$API_URL_FRONTEND/$FRONTEND_NAME/acls/$ACL_INDEX?version=$CONFIG_VERSION" \
  -H "Content-Type: application/json" \
  -d "$(jq -n --arg name "$BACKEND_NAME-acl" --arg value "$ACL_CRITERIA" \
        '{acl_name: $name, criterion: "hdr(host)", value: $value}')"
CONFIG_VERSION=$((CONFIG_VERSION + 1))
echo "✅ ACL '$BACKEND_NAME-acl' with criterion '$ACL_CRITERIA' added to frontend '$FRONTEND_NAME'"

# 4 Add a use_backend rule at the next available index; "name" is the target backend
RULE_INDEX=$("${CURL[@]}" "$API_URL/$API_URL_FRONTEND/$FRONTEND_NAME/backend_switching_rules" | jq 'length')

"${CURL[@]}" -X POST \
  "$API_URL/$API_URL_FRONTEND/$FRONTEND_NAME/backend_switching_rules/$RULE_INDEX?version=$CONFIG_VERSION" \
  -H "Content-Type: application/json" \
  -d "$(jq -n --arg name "$BACKEND_NAME-backend" --arg acl "$BACKEND_NAME-acl" \
        '{name: $name, cond: "if", cond_test: $acl}')"
echo "✅ Routing Rule added"
```
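add_backend.sh sends four separate changes and tracks CONFIG_VERSION by hand, so a failure halfway through can leave a backend without its routing rule. The API also offers transactions, which stage several changes and apply them together on commit. Below is a sketch of that alternative for the first two steps. It assumes the v3 transaction endpoints listed in the comments and is not what the script above does. add_backend_tx and the tx_* helpers are hypothetical names:

```bash
#!/usr/bin/env bash
# Sketch only, assuming the Data Plane API v3 transaction endpoints:
#   POST   /v3/services/haproxy/transactions?version=N  -> JSON object with an "id"
#   PUT    /v3/services/haproxy/transactions/<id>       -> commit the staged changes
#   DELETE /v3/services/haproxy/transactions/<id>       -> discard them
# Values are assumed to be plain strings (no quotes): the JSON is built with printf.
API_URL="${API_URL:-http://localhost:5555}"
CFG_PATH="v3/services/haproxy/configuration"
TX_PATH="v3/services/haproxy/transactions"

dp() { curl -fsS -u "$API_USER:$API_PASS" "$@"; }

tx_start()  { dp -X POST "$API_URL/$TX_PATH?version=$1" | jq -r '.id'; }
tx_commit() { dp -X PUT "$API_URL/$TX_PATH/$1" > /dev/null; }
tx_abort()  { dp -X DELETE "$API_URL/$TX_PATH/$1" > /dev/null; }

# Usage: add_backend_tx NAME ADDRESS PORT
add_backend_tx() {
  local version tx
  version=$(dp "$API_URL/$CFG_PATH/version") || return 1
  tx=$(tx_start "$version")
  [[ -n "$tx" && "$tx" != null ]] || return 1

  # Both changes are staged in the transaction with ?transaction_id instead of ?version
  if dp -X POST "$API_URL/$CFG_PATH/backends?transaction_id=$tx" \
        -H "Content-Type: application/json" \
        -d "$(printf '{"name": "%s-backend", "mode": "http"}' "$1")" > /dev/null &&
     dp -X POST "$API_URL/$CFG_PATH/backends/$1-backend/servers?transaction_id=$tx" \
        -H "Content-Type: application/json" \
        -d "$(printf '{"name": "%s-backend", "address": "%s", "port": %d}' "$1" "$2" "$3")" > /dev/null
  then
    tx_commit "$tx"   # apply the staged changes together
  else
    tx_abort "$tx"    # leave the running configuration untouched
    return 1
  fi
}
```

The ACL and routing-rule calls from the script could be staged the same way before the commit.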

- Add the internal ACL

```bash
#!/bin/bash
# Usage: ./add_internalacl.sh [FRONTEND_NAME]   (defaults to http-in)
set -euo pipefail

if [ -f ./.env ]; then
  source ./.env   # defines API_USER and API_PASS
fi
: "${API_USER:?define API_USER in .env}" "${API_PASS:?define API_PASS in .env}"

API_URL="http://localhost:5555"
API_URL_CONFIG="v3/services/haproxy/configuration/version"
API_URL_FRONTEND="v3/services/haproxy/configuration/frontends"

FRONTEND_NAME="${1:-http-in}"

CURL=(curl -fsS -u "$API_USER:$API_PASS")

# The current HAProxy config version
CONFIG_VERSION=$("${CURL[@]}" "$API_URL/$API_URL_CONFIG")
if [[ -z "$CONFIG_VERSION" ]]; then
  echo "Failed to get current config version"
  exit 1
fi
echo "Current config version: $CONFIG_VERSION"

# Find next available ACL index for the frontend
ACL_INDEX=$("${CURL[@]}" "$API_URL/$API_URL_FRONTEND/$FRONTEND_NAME/acls" | jq 'length')
echo "Next available ACL index for frontend $FRONTEND_NAME: $ACL_INDEX"

# "internal" matches clients whose source address falls in one of these ranges
"${CURL[@]}" -X POST \
  "$API_URL/$API_URL_FRONTEND/$FRONTEND_NAME/acls/$ACL_INDEX?version=$CONFIG_VERSION" \
  -H "Content-Type: application/json" \
  -d '{
    "acl_name": "internal",
    "criterion": "src",
    "value": "10.10.0.0/16 10.250.0.0/16 192.168.0.0/16 172.16.0.0/12"
  }'

echo "✅ ACL added to frontend '$FRONTEND_NAME'"
```
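On the workers, src is the real client address, because the load balancer’s servers use send-proxy and the workers bind with accept-proxy. HAProxy does the matching itself. The sketch below just mirrors the internal ACL locally so you can check which addresses it would let through. It is IPv4-only and assumes the same four ranges as the script; ip_to_int, ip_in_cidr and is_internal are hypothetical names:

```bash
#!/usr/bin/env bash
# Sketch: mirror the "internal" src ACL locally (IPv4 only).
INTERNAL_RANGES="10.10.0.0/16 10.250.0.0/16 192.168.0.0/16 172.16.0.0/12"

# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeeds if IP $1 lies inside CIDR $2 (e.g. 172.16.0.0/12).
ip_in_cidr() {
  local net="${2%/*}" bits="${2#*/}" mask ip_n net_n
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  ip_n=$(ip_to_int "$1")
  net_n=$(ip_to_int "$net")
  (( (ip_n & mask) == (net_n & mask) ))
}

# Succeeds if IP $1 matches any range of the internal ACL.
is_internal() {
  local cidr
  for cidr in $INTERNAL_RANGES; do
    ip_in_cidr "$1" "$cidr" && return 0
  done
  return 1
}
```

For example, is_internal 10.0.0.1 fails. This ACL only covers 10.10.0.0/16 and 10.250.0.0/16, not all of 10.0.0.0/8 like the load balancer’s own internal ACL.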

- Configuring an existing backend to only be accessible over the intranet

```bash
#!/bin/bash
# Usage: ./put_internalacl.sh BACKEND_NAME [FRONTEND_NAME]
# BACKEND_NAME is the full backend name (e.g. app1-backend); FRONTEND_NAME defaults to http-in.
set -euo pipefail

if [ -f ./.env ]; then
  source ./.env   # defines API_USER and API_PASS
fi
: "${API_USER:?define API_USER in .env}" "${API_PASS:?define API_PASS in .env}"

API_URL="http://localhost:5555"
API_URL_CONFIG="v3/services/haproxy/configuration/version"
API_URL_FRONTEND="v3/services/haproxy/configuration/frontends"

BACKEND_NAME="${1:-}"
FRONTEND_NAME="${2:-http-in}"

if [[ -z "$BACKEND_NAME" ]]; then
  echo "Usage: $0 BACKEND_NAME [FRONTEND_NAME]"
  exit 1
fi

CURL=(curl -fsS -u "$API_USER:$API_PASS")

# The current HAProxy config version
CONFIG_VERSION=$("${CURL[@]}" "$API_URL/$API_URL_CONFIG")
if [[ -z "$CONFIG_VERSION" ]]; then
  echo "Failed to get current config version"
  exit 1
fi
echo "Current config version: $CONFIG_VERSION"

# List existing backend switching rules
RULES_JSON=$("${CURL[@]}" "$API_URL/$API_URL_FRONTEND/$FRONTEND_NAME/backend_switching_rules")

# Find the rule that switches to our backend ("name" holds the target backend)
RULE_INDEX=$(jq --arg b "$BACKEND_NAME" 'map(.name == $b) | index(true)' <<< "$RULES_JSON")

if [[ "$RULE_INDEX" == "null" ]]; then
  echo "No existing rule found for backend $BACKEND_NAME"
  exit 1
fi

# Get the existing cond_test and append the "internal" ACL
EXISTING_COND_TEST=$(jq -r --argjson i "$RULE_INDEX" '.[$i].cond_test' <<< "$RULES_JSON")
echo "Cond test: $EXISTING_COND_TEST"
NEW_COND_TEST="$EXISTING_COND_TEST internal"
echo "New cond test: $NEW_COND_TEST"

# Update the existing rule with the new cond_test
"${CURL[@]}" -X PUT \
  "$API_URL/$API_URL_FRONTEND/$FRONTEND_NAME/backend_switching_rules/$RULE_INDEX?version=$CONFIG_VERSION" \
  -H "Content-Type: application/json" \
  -d "$(jq -n --arg name "$BACKEND_NAME" --arg cond_test "$NEW_COND_TEST" \
        '{name: $name, cond: "if", cond_test: $cond_test}')"

echo "✅ Internal ACL added to routing rule for $BACKEND_NAME"
```
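The rule updated by put_internalacl.sh renders as use_backend followed by the backend name, if, and the cond_test. ACL names listed one after another are ANDed, so app1-acl internal only matches requests for app1’s host that also come from a private address. The script appends internal unconditionally, so running it twice yields app1-acl internal internal. Here is a small hypothetical helper that keeps the append idempotent:

```bash
#!/usr/bin/env bash
# Hypothetical helper: append an ACL to a cond_test only if it is not already there.
# Space-separated ACL names are ANDed by HAProxy.
add_condition() {
  local cond_test="$1" acl="$2" word
  for word in $cond_test; do
    if [[ "$word" == "$acl" ]]; then
      echo "$cond_test"
      return 0
    fi
  done
  echo "${cond_test:+$cond_test }$acl"
}

NEW_COND_TEST=$(add_condition "app1-acl" internal)
echo "use_backend app1-backend if $NEW_COND_TEST"
```

In put_internalacl.sh, NEW_COND_TEST=$(add_condition "$EXISTING_COND_TEST" internal) would replace the plain concatenation.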

- Deleting the backend, its ACLs and corresponding routing rules

```bash
#!/bin/bash
# Usage: ./delete_backend.sh BACKEND_NAME [FRONTEND_NAME]
# BACKEND_NAME is the full backend name created by add_backend.sh (e.g. app1-backend);
# its ACL is expected to be called <name>-acl (e.g. app1-acl). FRONTEND_NAME defaults to http-in.
set -euo pipefail

if [ -f ./.env ]; then
  source ./.env   # defines API_USER and API_PASS
fi
: "${API_USER:?define API_USER in .env}" "${API_PASS:?define API_PASS in .env}"

API_URL="http://localhost:5555"
API_URL_CONFIG="v3/services/haproxy/configuration/version"
API_URL_BACKEND="v3/services/haproxy/configuration/backends"
API_URL_FRONTEND="v3/services/haproxy/configuration/frontends"

BACKEND_NAME="${1:-}"
FRONTEND_NAME="${2:-http-in}"

if [[ -z "$BACKEND_NAME" ]]; then
  echo "Usage: $0 BACKEND_NAME [FRONTEND_NAME]"
  exit 1
fi
ACL_NAME="${BACKEND_NAME%-backend}-acl"

CURL=(curl -fsS -u "$API_USER:$API_PASS")

CONFIG_VERSION=$("${CURL[@]}" "$API_URL/$API_URL_CONFIG")
if [[ -z "$CONFIG_VERSION" ]]; then
  echo "Failed to get current config version"
  exit 1
fi
echo "Current config version: $CONFIG_VERSION"

# 1. Delete the backend switching rules that point at this backend,
#    highest index first: deleting an entry shifts every later index down by one
RULE_INDEXES=$("${CURL[@]}" "$API_URL/$API_URL_FRONTEND/$FRONTEND_NAME/backend_switching_rules" \
  | jq -r --arg b "$BACKEND_NAME" 'to_entries[] | select(.value.name == $b) | .key' | sort -nr)

for INDEX in $RULE_INDEXES; do
  "${CURL[@]}" -X DELETE \
    "$API_URL/$API_URL_FRONTEND/$FRONTEND_NAME/backend_switching_rules/$INDEX?version=$CONFIG_VERSION"
  echo "✅ Routing rule at index $INDEX deleted"
  CONFIG_VERSION=$((CONFIG_VERSION + 1))
done

# 2. Delete this backend's ACLs (the shared "internal" ACL is left alone), highest index first
ACL_INDEXES=$("${CURL[@]}" "$API_URL/$API_URL_FRONTEND/$FRONTEND_NAME/acls" \
  | jq -r --arg a "$ACL_NAME" 'to_entries[] | select(.value.acl_name == $a) | .key' | sort -nr)

for INDEX in $ACL_INDEXES; do
  "${CURL[@]}" -X DELETE \
    "$API_URL/$API_URL_FRONTEND/$FRONTEND_NAME/acls/$INDEX?version=$CONFIG_VERSION"
  echo "✅ ACL at index $INDEX deleted"
  CONFIG_VERSION=$((CONFIG_VERSION + 1))
done

# 3. Delete the backend itself, now that nothing references it
"${CURL[@]}" -X DELETE "$API_URL/$API_URL_BACKEND/$BACKEND_NAME?version=$CONFIG_VERSION"
echo "✅ Backend '$BACKEND_NAME' deleted"
```
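The API addresses ACLs and routing rules by index, and deleting one entry shifts every later index down by one. That is why the rule indexes are sorted with sort -nr before deleting. This toy bash sketch is not an API call; it replays both orders on a plain array (delete_indexes is a hypothetical name):

```bash
#!/usr/bin/env bash
# Illustration only: index-based deletes on a bash array, to show why
# the highest index has to go first.
delete_indexes() {
  local -a items=($1)   # word-split the space-separated list
  shift
  local i
  for i in "$@"; do
    items=("${items[@]:0:i}" "${items[@]:i+1}")
  done
  echo "${items[*]}"
}

delete_indexes "r0 r1 r2 r3 r4" 1 3   # ascending:  prints "r0 r2 r3" (r4 removed by mistake)
delete_indexes "r0 r1 r2 r3 r4" 3 1   # descending: prints "r0 r2 r4" (r1 and r3 removed)
```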

Conclusion

This was a wild one. I thought the dataplaneapi would be integrated into some kind of GUI and take a couple of clicks to set up, but boy, was I wrong… It turned out to be a lot more complicated than expected. Still, going through this project was definitely a win, as I’d been trying to build an HA setup for a while now. Kubernetes would have given a more “out of the box” experience and less time spent on the installation. In the end I’m glad I did it regardless, because I’m planning on using HAProxy as an ingress controller for k8s.

See you on the next one.

Cheers